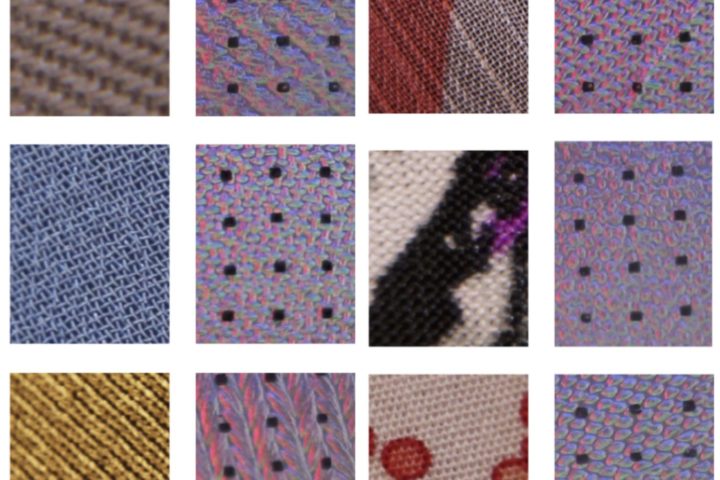

Sharing features through vision and tactile sensing

Vision and touch are two important sensing modalities for humans, and they provide complementary information about the environment.

Project team members Shan Luo, Tony Cohn and Raul Fuentes (University of Leeds), and external collaborators Wenzhen Yuan and Edward Adelson (Massachusetts Institute of Technology) have been working to equip robots with a similar multi-modal sensing ability to achieve better perception.

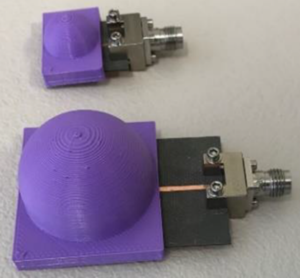

They have proposed a novel framework for learning a joint latent space shared by two sensing modalities. The framework was tested on camera images and tactile images of cloth, and the features learned from each data set were paired using maximum covariance analysis. Vision and tactile sensing can each achieve more than 90% recognition accuracy on their own, but the new framework improves perception performance further by exploiting the shared representation space, compared with learning from a single data set.
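As a rough illustration of the pairing step, here is a minimal sketch of textbook maximum covariance analysis (MCA) on two feature matrices. It assumes the vision and tactile features have already been extracted into row-aligned NumPy arrays (one row per cloth sample). MCA centres both sets, forms their cross-covariance matrix and takes its singular value decomposition; the leading singular vector pairs give projection directions whose projected features covary as strongly as possible. The function names `mca_fit` and `mca_project`, the synthetic data and the choice of `k` are hypothetical and not taken from the authors' implementation.

```python
import numpy as np


def mca_fit(X, Y, k):
    """Maximum covariance analysis (generic sketch, hypothetical helper).

    X: (n, p) vision features, Y: (n, q) tactile features, rows paired by sample.
    Returns the feature means, the first k direction pairs and their covariances.
    """
    x_mean = X.mean(axis=0)
    y_mean = Y.mean(axis=0)
    Xc = X - x_mean
    Yc = Y - y_mean
    # p x q cross-covariance between the two modalities
    C = Xc.T @ Yc / (X.shape[0] - 1)
    # SVD of C: u_i^T C v_i = s_i is the covariance captured by pair i
    U, s, Vt = np.linalg.svd(C, full_matrices=False)
    return x_mean, y_mean, U[:, :k], Vt[:k].T, s[:k]


def mca_project(X, mean, W):
    """Project centred features onto the learned directions (shared space)."""
    return (X - mean) @ W


# Synthetic example: both modalities are noisy views of a 2-D hidden signal.
rng = np.random.default_rng(0)
n = 200
z = rng.normal(size=(n, 2))
X = z @ rng.normal(size=(2, 5)) + 0.1 * rng.normal(size=(n, 5))  # "vision"
Y = z @ rng.normal(size=(2, 4)) + 0.1 * rng.normal(size=(n, 4))  # "tactile"

x_mean, y_mean, Wx, Wy, s = mca_fit(X, Y, k=2)
zx = mca_project(X, x_mean, Wx)  # vision features in the shared space
zy = mca_project(Y, y_mean, Wy)  # tactile features in the shared space
```

The paired projections `zx` and `zy` live in a common low-dimensional space, so a downstream classifier could use either or both; how the published framework combines them for recognition is not described here.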

The results will be presented by Shan Luo at the 2018 IEEE International Conference on Robotics and Automation (May 21–25, 2018, Brisbane, Australia) and will be included in the conference proceedings.
